Qilimanjaro, IBM, HPE, QCI - The Week in Quantum Computing, November 10th, 2025

Issue #259

Quick Recap

The Spanish government invested €1 million in Qilimanjaro, supporting its scalable superconducting qubit systems and the launch of Europe’s first multimodal Quantum Data Center. Microsoft expanded its Denmark Quantum Lab, now its largest quantum site, aiming to advance error-resilient topological qubits and support the upcoming Magne quantum computer. IBM made headlines by introducing its 120-qubit Nighthawk processor, the new Loon lattice architecture targeting fault tolerance by 2029, and an upgraded Qiskit library. At the Quantum Developer Conference 2025, IBM, alongside Flatiron, BlueQubit, and Algorithmiq, also launched the Quantum Advantage Tracker to bring rigor and transparency to quantum advantage claims.

Industry alliances and research continued to gain momentum. HPE formed the Quantum Scaling Alliance with partners like Quantum Machines, Riverlane, and 1QBit, seeking to build hybrid quantum supercomputers; Nvidia is collaborating with alliance member Quantum Machines on software. MIT launched the MIT Quantum Initiative (QMIT), pledging collaboration with MIT Lincoln Laboratory and US government agencies to apply quantum solutions to real-world problems like security and materials science.

In research, the release of CUNQA, an HPC-focused emulator for distributed quantum computing, and a collaborative study involving Rigetti and AWS on quantum-enhanced machine learning for financial prediction underscored the push toward practical applications and infrastructure for quantum integration.

“We are going to figure out what real problems exist that we could approach with quantum tools, and work toward them in the next five years.”

— Danna Freedman, MIT

The Week in Quantum Computing

Paper: CUNQA: a Distributed Quantum Computing emulator for HPC

Researchers Jorge Vázquez-Pérez, Daniel Expósito-Patiño, Marta Losada, Álvaro Carballido, Andrés Gómez, and Tomás F. Pena have introduced CUNQA, an open-source emulator that enables Distributed Quantum Computing (DQC) research within high-performance computing (HPC) environments. Presented as the first tool to emulate all three DQC models—no-communication, classical-communication, and quantum-communication—in HPC, CUNQA lets users test and study DQC architectures before their physical realization. The team demonstrates CUNQA’s capabilities using the Quantum Phase Estimation (QPE) algorithm. This work is significant in 2025 because, as the field gravitates toward HPC integration and multi-QPU architectures, CUNQA provides a practical platform to explore and validate DQC concepts prior to the existence of real distributed quantum computers.

Spanish SETT invests €1M in Qilimanjaro

The Spanish Government, via the Sociedad Española para la Transformación Tecnológica (SETT), has invested €1 million in Barcelona-based quantum computing startup Qilimanjaro Quantum Tech (QQT), reinforcing national and European technological sovereignty. Founded in 2019 as a spin-off from IFAE, the University of Barcelona, and the Barcelona Supercomputing Center, QQT stands out as Spain’s first “full stack” quantum computing company with a focus on scalable, energy-efficient superconducting qubit systems. The company inaugurated Europe’s first multimodal Quantum Data Center, targeting up to 10 quantum computers, and offers quantum cloud services via its SpeQtrum platform. The investment, aligned with PERTE Chip’s strategy, underscores Spain’s ongoing commitment to building a robust quantum ecosystem, positioning QQT as a key player in 2025’s quantum landscape.

Leading quantum at an inflection point

MIT has announced the formation of the MIT Quantum Initiative (QMIT), spearheaded by faculty director Danna Freedman and backed by MIT President Sally Kornbluth as a strategic priority for 2025. QMIT aims to unite researchers and industry experts to drive quantum breakthroughs in areas such as security, encryption, navigation, astronomy, and materials science. The initiative will collaborate closely with MIT Lincoln Laboratory and the U.S. government, focusing on quantum hardware, rapid prototyping, and national security. QMIT will launch formally on Dec. 8 and eventually establish a dedicated campus hub. As Freedman states, “We are going to figure out what real problems exist that we could approach with quantum tools, and work toward them in the next five years.”

HPE Forms Quantum Computing Consortium To Develop Supercomputer

On November 10, 2025, Hewlett Packard Enterprise (HPE) announced the formation of the Quantum Scaling Alliance, a consortium including Applied Materials, Qolab, Quantum Machines, Riverlane, Synopsys, the University of Wisconsin, and 1QBit, aimed at developing a “practically useful and cost-effective quantum supercomputer.” HPE’s High Performance Computing division, which includes Cray Research, targets hybrid approaches, echoing industry expectations that traditional and quantum supercomputers will coexist. Nvidia, collaborating with consortium member Quantum Machines, is developing software for both architectures. HPE stock rose over 2% to $23.94 on the news and is up 11% in 2025. Fiscal 2026 adjusted earnings are projected at $2.20–$2.40 per share.

POET Technologies and Quantum Computing Inc. to Co-Develop 3.2 Tbps Optical Engines for CPO and Next-Gen AI Connectivity

POET Technologies and Quantum Computing Inc. announced a partnership to co-develop 3.2 Tbps optical engines for co-packaged optics (CPO) and next-generation AI connectivity. The collaboration aims to advance ultra-high-speed data transmission, which is significant for the performance and scalability of quantum computing and AI systems in 2025. By integrating POET’s photonic integration expertise with Quantum Computing Inc.’s quantum technology, the firms intend to address growing bandwidth demands. The development targets critical infrastructure for data centers and high-performance computing environments, where rapid data movement underpins quantum computing workloads.

Microsoft opens state-of-the-art Quantum Lab in Lyngby, Denmark, accelerating progress toward scalable quantum computing

Microsoft has expanded its Quantum Lab in Lyngby, Denmark, making it the company’s largest quantum site and bringing total quantum investments in Denmark to over DKK 1 billion. The facility will focus on scaling the topological qubits at the heart of Microsoft’s “Majorana 1” chip, the world’s first quantum processing unit powered by a topological core, marking a significant advance in error-resilient quantum architectures. Collaboration with Danish institutions like the Niels Bohr Institute and DTU underpins Microsoft’s development of manufacturable quantum technology. The new facility, one of the first AI-enabled hardware labs globally, strengthens Denmark’s role as a quantum hub. Additionally, Microsoft will provide software and integration to Magne, a next-generation quantum computer being developed in partnership with Atom Computing and expected to be operational by 2026.

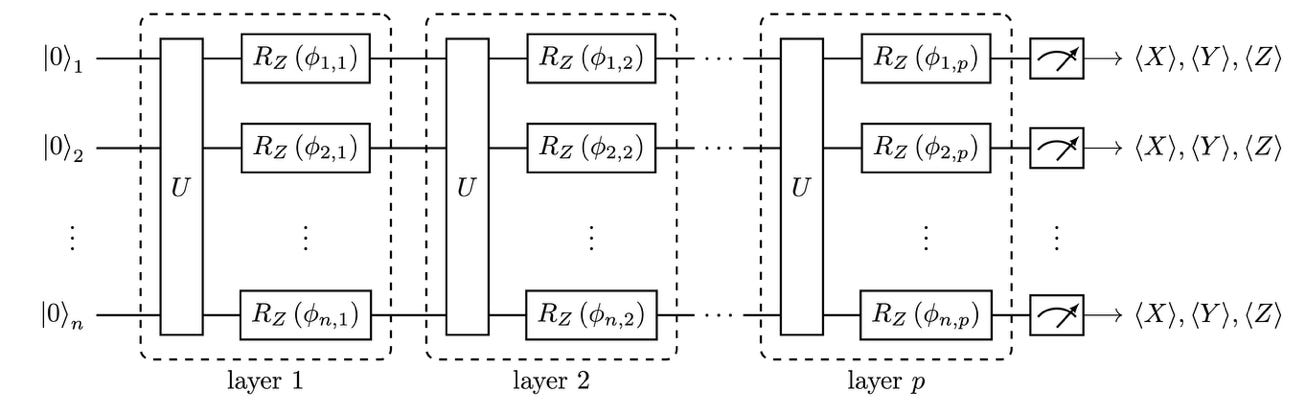

Exact simulation of Quantum Enhanced Signature Kernels for financial data streams prediction using Amazon Braket

A consortium including Rigetti Computing, Imperial College London, Standard Chartered, and AWS investigated quantum enhanced signature kernels for predicting mid-price movements in financial Limit Order Book (LOB) data using Amazon Braket’s SV1 simulator. Supported by an Innovate UK grant, the team tested quantum feature maps on the public FI-2010 dataset (LOB data for 5 NASDAQ OMX Nordic stocks) for multi-class classification. The study simulated circuits of up to 32 qubits and found that reducing features from 40 to as low as 12 had only minor effects on model performance.

IBM unveils updated quantum computing products

IBM has introduced two new quantum computing products: the Nighthawk quantum processing unit with 120 qubits connected via 218 tunable couplers, capable of handling up to 5,000 two-qubit gates (expected to rise to 7,500 by 2026 and 10,000 by 2027), and the Loon lattice architecture, featuring longer couplers for improved qubit connectivity and reliability, aiming for fault-tolerant quantum computing by 2029. IBM also announced an upgraded Qiskit library with a C++ interface for better integration with high-performance classical computing. These launches, detailed by IBM’s Jay Gambetta, mark progress towards scalable, fault-tolerant quantum systems and have garnered federal attention, including participation in DARPA’s Quantum Benchmarking Initiative.

Scaling for quantum advantage and beyond

At the Quantum Developer Conference 2025, IBM showcased significant progress in scaling quantum advantage through advances in hardware, software, and collaborative validation. The newly announced 120-qubit IBM Quantum Nighthawk features a square qubit topology, increasing couplers from 176 (Heron) to 218 and supporting circuits 30% more complex, targeting 5,000-gate runs by year-end. Heron’s third revision achieves median two-qubit gate errors below 1 in 1,000 for 57/176 couplings and a quantum processing efficiency of 330,000 CLOPS. Qiskit SDK v2.2 is now 83x faster at circuit transpilation than Tket 2.6.0. IBM, Flatiron, BlueQubit, and Algorithmiq launched an open Quantum Advantage Tracker to rigorously validate advantage claims, emphasizing the need for improved hardware and systematic proof before declaring quantum advantage achieved.
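The qubit and coupler counts quoted for Nighthawk can be sanity-checked with a little arithmetic. The sketch below assumes, purely for illustration, that the square topology is a full rectangular grid with one coupler per nearest-neighbour pair; the function names are hypothetical and the actual chip layout is not described in this summary. Under that assumption, a grid of r rows and c columns has r(c-1) + c(r-1) couplers, and the code searches for grid shapes that give 120 qubits and 218 couplers.

```python
def grid_couplers(rows: int, cols: int) -> int:
    """Nearest-neighbour links in a full rows x cols square grid (assumed layout)."""
    # Each row contributes (cols - 1) horizontal links,
    # each column contributes (rows - 1) vertical links.
    return rows * (cols - 1) + cols * (rows - 1)


def matching_grids(qubits: int, couplers: int) -> list[tuple[int, int]]:
    """All (rows, cols) with rows <= cols that match both counts."""
    return [
        (r, qubits // r)
        for r in range(1, int(qubits ** 0.5) + 1)
        if qubits % r == 0 and grid_couplers(r, qubits // r) == couplers
    ]


print(matching_grids(120, 218))  # prints [(10, 12)]
```

A 10 x 12 grid is the only rectangular shape consistent with both figures, so the reported numbers at least fit a plain square lattice.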

IBM says ‘Loon’ chip shows path to useful quantum computers by 2029

IBM has announced its new “Loon” chip, positioning it as a significant step toward functional quantum computers by 2029. The company claims the Loon chip, detailed in its latest update, demonstrates scalable error correction, a key challenge in quantum computing, by integrating both classical and quantum processing components. IBM’s roadmap outlines progression toward a million-qubit system, large enough to run complex, error-tolerant algorithms. The announcement underlines IBM’s ongoing commitment to practical quantum computing advancements, focusing on solving real-world problems previously considered beyond the reach of classical systems. In 2025, this marks a notable demonstration of concrete progress, as error correction and scaling remain persistent hurdles in the field.

A new quantum toolkit for optimization

Google Quantum AI, in collaboration with Stanford, MIT, and Caltech, has published research in *Nature* introducing Decoded Quantum Interferometry (DQI), a quantum algorithm that leverages quantum interference and established decoding techniques to address hard optimization problems. The DQI approach transforms certain optimization challenges—such as “optimal polynomial intersection” (OPI), a problem with applications in data science and cryptography—into decoding problems like those encountered with Reed-Solomon codes. While specialized classical algorithms exist, many variants remain intractable for conventional computers. The research highlights that large-scale, error-corrected quantum computers using DQI could efficiently approximate solutions to problems previously thought unsolvable by any classical method, advancing the role of quantum computation in meaningful real-world tasks as of 2025.

Google’s framework for developing quantum applications

On November 13, 2025, Google introduced a five-stage framework to guide the advancement of quantum computing applications, detailed in their paper “The Grand Challenge of Quantum Applications.” Ryan Babbush, Director of Research, Quantum Algorithms and Applications at Google, outlined these stages: Discovery, Identifying Problem Instances, Establishing Real-World Advantage, Engineering for Use, and a fifth, final stage covering deployment. Google’s high-performance Willow chip underscores recent hardware progress, with the next milestone focused on achieving a long-lived logical qubit. The framework aims to address the ongoing challenge of translating theoretical quantum advantage into tangible real-world impact, especially as the field struggles to find significant, relevant use cases where quantum computers outperform classical methods.

Haiqu demonstrates improved anomaly detection and pattern recognition with IBM quantum computer

Haiqu has demonstrated enhanced anomaly detection and pattern recognition capabilities on an IBM quantum computer. The tests underscore quantum hardware’s evolving ability to address complex analytical tasks, which are foundational in sectors like finance and cybersecurity. While details on the quantum system’s scale and the specific metrics of improvement were not provided, this collaboration highlights practical progress beyond simulated environments.

Will quantum computing be chemistry’s next AI?

At the 2025 Quantum World Congress, quantum computing drew significant attention as an emerging technology for chemistry, although quantum computers have “yet to outperform their classical counterparts.” Panelists including Alán Aspuru-Guzik compared quantum’s development to AI, noting a possible decades-long path from investment to practical use. Despite billion-dollar investments this year, experts cited persistent hardware and software challenges, with “applications important to industry” projected to require “millions of qubits.”

Paper: Beyond Penrose tensor diagrams with the ZX calculus: Applications to quantum computing, quantum machine learning, condensed matter physics, and quantum gravity

Quanlong Wang, Richard D. P. East, Razin A. Shaikh, Lia Yeh, Boldizsár Poór, and Bob Coecke introduce the Spin-ZX calculus, extending Penrose’s tensor diagrams into a complete diagrammatic language tailored for quantum computing and SU(2) systems. Their framework unifies and visualizes computations in areas ranging from permutational quantum computing and quantum machine learning to condensed matter physics and quantum gravity. Notably, the Spin-ZX calculus enables diagrammatic derivation of key spin coupling objects like Clebsch-Gordan coefficients and spin Hamiltonians. Embedding Spin-ZX within the mixed-dimensional ZX calculus—already complete for finite-dimensional Hilbert spaces—they offer new graphical tools and potential algorithms for quantum technologies.

Quantum chip gives China’s AI data centres 1,000-fold speed boost

A new optical quantum chip developed by the Chip Hub for Integrated Photonics Xplore (CHIPX) at Shanghai Jiao Tong University and Turing Quantum has delivered a claimed “over a thousandfold” acceleration in complex problem-solving for AI data centres, according to its developers. The photonic quantum chip, which integrates photons and electronics at the chip level and supports wafer-scale mass production, won the “Leading Technology Award” at the 2025 World Internet Conference Wuzhen Summit, one of 17 achievements from over 400 contenders globally. Already deployed in aerospace, biomedicine, and finance, the chip reportedly provides “computing power support exceeding the limit of classical computers.” Professor Jin Xianmin described the integration approach as possibly “a world first.” China is showcasing significant momentum in quantum hardware applications this year.

Why Harvest Now, Decrypt Later may not be your main concern

Jaime Gómez García, Global Head of Santander Quantum Threat Program and Chair of Europol Quantum Safe Financial Forum, challenges the widespread focus on the “Harvest Now, Decrypt Later” (HNDL) threat in quantum computing for most industries in 2025. Gómez García argues HNDL is expensive, inefficient, and typically relevant only for sectors demanding long-term confidentiality, such as defence or diplomacy. For commercial organizations, the risk and impact of HNDL are minor compared to threats like “Trust Now, Forge Later” (TNFL), which targets digital signatures and authentication, posing immediate and widespread risks if quantum computers crack public keys or Certificate Authority roots. He recommends prioritizing the elimination of obsolete cryptography (“Harvest Now, Decrypt Now”) over rushing to deploy post-quantum cryptography.

Harvard’s 448-Atom Quantum Computer Achieves Fault-Tolerant Milestone

A Harvard-led team has announced a breakthrough with a 448-atom neutral-rubidium quantum processor capable of scalable, fault-tolerant quantum computation. For the first time, the key ingredients for error correction, in which adding physical qubits reduces logical error rates, were demonstrated within a single, integrated system. Senior author Mikhail Lukin called these “the most advanced” experiments yet, and Dolev Bluvstein emphasized the system’s “fault tolerant” and scalable design. Hartmut Neven from Google Quantum AI praised the achievement as “a significant advance.” Notably, the system’s innovations include non-destructive qubit readout and rapid cycle rates, allowing complex circuits to run for more than 1 second. While still distant from breaking current encryption, this milestone raises the profile of neutral-atom platforms in 2025’s competitive quantum computing landscape.
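The idea that adding physical qubits can lower the logical error rate is easiest to see in a toy model. The sketch below is not the Harvard team's scheme: it assumes a classical n-bit repetition code with independent bit flips of probability p and majority-vote decoding, and the function name is hypothetical. The logical error rate is then the probability that more than half of the n bits flip.

```python
from math import comb


def logical_error_rate(p: float, n: int) -> float:
    """Probability that a majority of n independent bits flip (toy repetition code)."""
    if n % 2 == 0:
        raise ValueError("n must be odd so that majority vote is unambiguous")
    # Sum the binomial tail: k flips out of n, for every k that is a majority.
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


# Below the 50% flip probability, larger codes give smaller logical error rates.
for n in (1, 3, 5, 7):
    print(n, logical_error_rate(0.01, n))
```

In this toy model the benefit only appears when p is below 0.5; above that, adding bits makes things worse, which mirrors why real error correction needs physical error rates under a threshold before scaling up helps.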

Regarding this week's quantum update, it's impressive to see so many alliances forming. Do you think this push for collaboration will truly accelerate finding those "real problems" for quantum, or are there new challenges ahead? Your summaries always offer such a clear and insightful perspective.

HPE's Quantum Scaling Alliance forming with major players like Nvidia makes sense given the complexity of building hybrid quantum supercomputers. The partnership approach seems more pragmatic than trying to build everything in-house. Also interesting how the CUNQA emulator focuses on HPC applications; that's where we'll probably see practical value first, before quantum achieves broader commercial viability.